Trustworthy AI

The 7 criteria of trustworthy AI were proposed in 2019 by the High-Level Expert Group on Artificial Intelligence (AI HLEG), an independent expert group set up by the European Commission.

👉 Their goal: to define guidelines ensuring that AI is lawful, ethical, and technically robust.

The 7 criteria

- Human agency and oversight: AI must remain under human control, without uncontrolled autonomy.

- Technical robustness and safety: resilience against errors, attacks, and failures.

- Privacy and data governance: responsible and secure management of personal data.

- Transparency: explainability of decisions, traceability of processes.

- Diversity, non-discrimination, and fairness: avoiding bias and ensuring inclusiveness.

- Societal and environmental well-being: contributing positively to society and minimizing ecological impact.

- Accountability: existence of redress and audit mechanisms in case of issues.

⚖️ Unlike the requirements of the AI Act, these principles are not legal obligations. They form an ethical framework that strongly inspired the European regulation and the ALTAI checklist (Assessment List for Trustworthy Artificial Intelligence, a practical self-assessment tool).

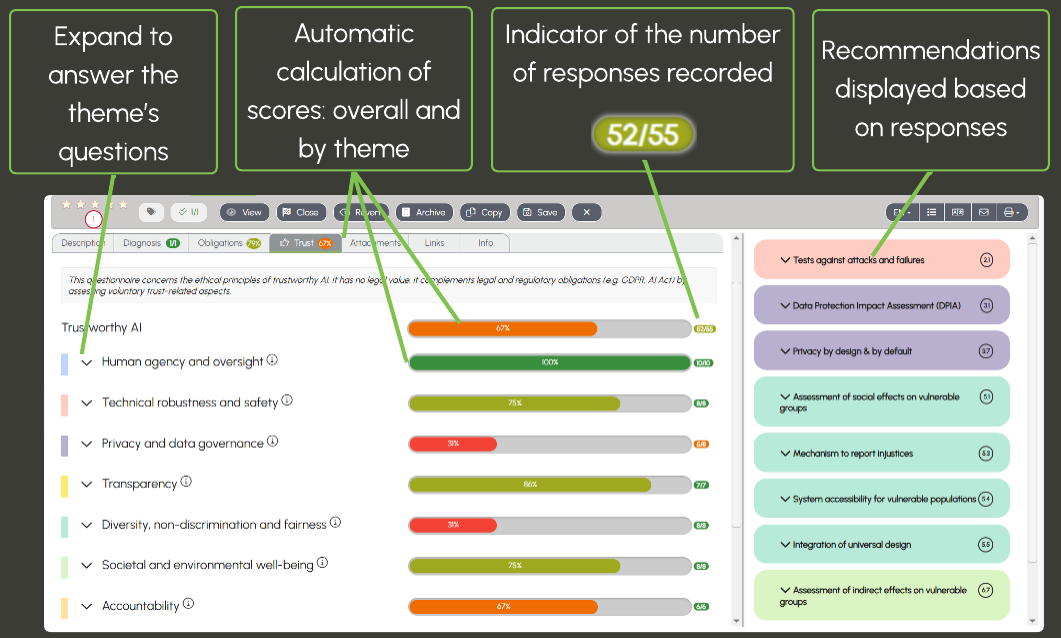

Trust tab

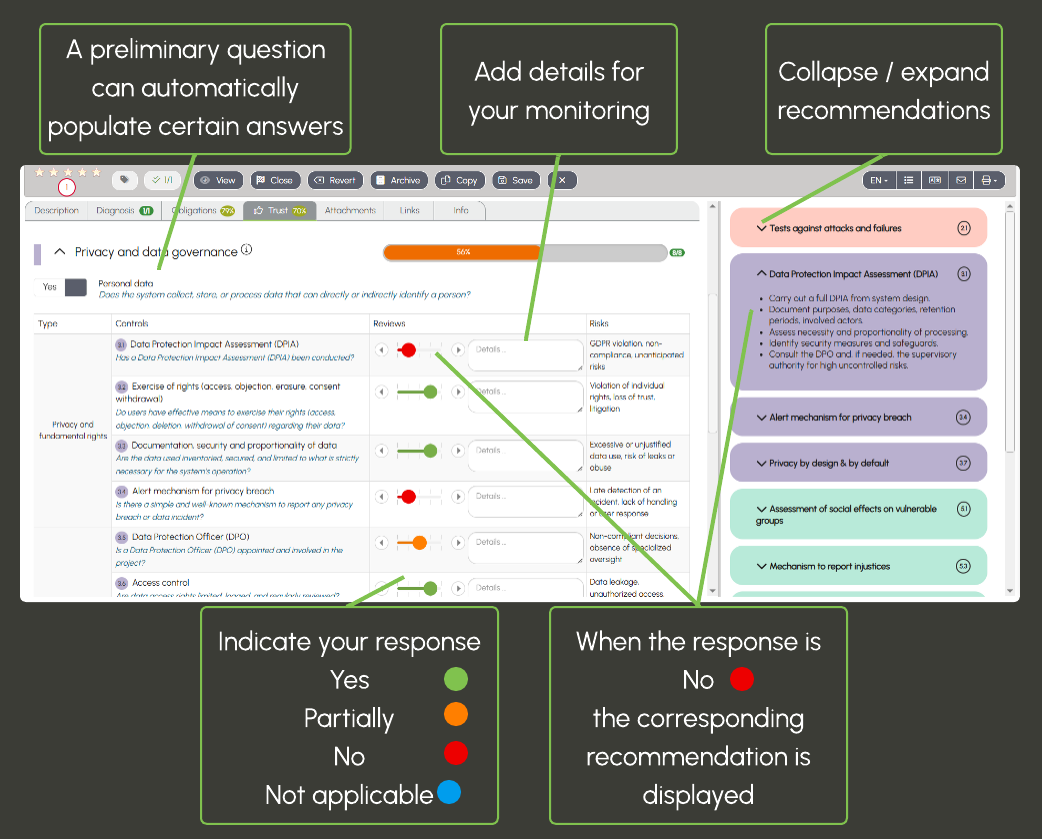

On the left, the form asks 6 to 10 questions per theme (55 in total). The overall score and the score for each theme are calculated automatically as you answer. On the right, targeted recommendations appear for each negative response.

Some themes begin with a preliminary question that can automatically populate the questionnaire. For example: if the system does not process any personal data, then all the answers in the Privacy & data governance theme are automatically set to Not applicable.
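To make the mechanism above concrete, here is a minimal Python sketch of how a preliminary question could set a whole theme to Not applicable, and how theme scores and recommendations could then be derived. It is only an illustration: the scoring rule (share of positive answers among applicable questions), the answer values, the sample questions, and every function name are assumptions, not the tool's actual implementation.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical answer values; the real form's options may differ.
YES, NO, NOT_APPLICABLE = "yes", "no", "not_applicable"


@dataclass
class Question:
    theme: str
    text: str
    answer: Optional[str] = None  # None means "not answered yet"


def apply_preliminary(questions, theme, applies):
    """If the preliminary question says the theme does not apply,
    set every answer in that theme to Not applicable."""
    if not applies:
        for q in questions:
            if q.theme == theme:
                q.answer = NOT_APPLICABLE


def theme_score(questions, theme):
    """Assumed rule: share of 'yes' among questions answered yes or no.
    Returns None when no question in the theme can be scored."""
    scored = [q for q in questions if q.theme == theme and q.answer in (YES, NO)]
    if not scored:
        return None
    return sum(q.answer == YES for q in scored) / len(scored)


def recommendations(questions):
    """Each negative response is flagged for a recommendation."""
    return [q.text for q in questions if q.answer == NO]


# Made-up sample questions, for illustration only.
questions = [
    Question("Privacy & data governance", "Is personal data minimised?"),
    Question("Privacy & data governance", "Is access to the data logged?"),
    Question("Transparency", "Are decisions traceable?", YES),
    Question("Transparency", "Are users told they interact with an AI?", NO),
]

# The system processes no personal data, so the theme does not apply.
apply_preliminary(questions, "Privacy & data governance", applies=False)

privacy_score = theme_score(questions, "Privacy & data governance")
transparency_score = theme_score(questions, "Transparency")
flagged = recommendations(questions)
print(privacy_score, transparency_score, flagged)
```

In this sketch, Not applicable answers are simply left out of the score, so a theme that does not apply neither raises nor lowers the result. The actual tool may weight or aggregate answers differently.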

💡 From the Links tab, you can create activities related to the AI System form.